Use Cases

Automotive ADAS & AD simulation

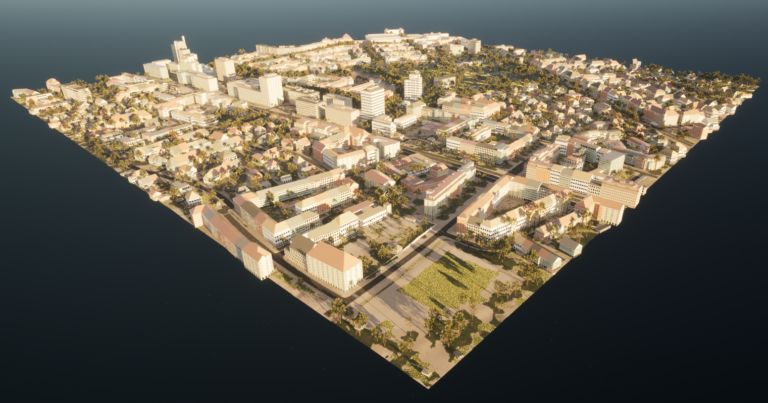

Creating real-world scenes at scale

We offer 3D world twins as environment models for simulating advanced driver assistance systems (ADAS) and autonomous driving (AD) systems. They are reconstructed from real-world geodata with our AI and 3D technology.

Our 3D world twins can be imported as static scene layers into several simulation and rendering tools:

- Unreal Engine 4 & 5

- dSPACE AURELION

- CARLA

- IPG CarMaker 12

- MathWorks RoadRunner

We support ASAM OpenDRIVE, and every 3D world twin is generated in sync with an existing HD map.
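As a rough illustration of what reading an ASAM OpenDRIVE file can look like, the following Python sketch lists the roads of a map using only the standard library. The embedded XML is a made-up, heavily trimmed sample (real files also carry geometry, lanes and more), and the function name `list_roads` is hypothetical; it is an illustration, not part of any product API.

```python
import xml.etree.ElementTree as ET

# Made-up, heavily trimmed OpenDRIVE sample for illustration only.
SAMPLE_XODR = """<?xml version="1.0"?>
<OpenDRIVE>
  <header revMajor="1" revMinor="6" name="sample"/>
  <road name="Main Street" length="120.5" id="1" junction="-1"/>
  <road name="" length="18.2" id="2" junction="10"/>
</OpenDRIVE>"""


def list_roads(xodr_text):
    """Return (id, name, length in metres, is_junction_road) per road."""
    root = ET.fromstring(xodr_text)
    roads = []
    for road in root.iter("road"):
        roads.append((
            road.get("id"),
            road.get("name", ""),
            float(road.get("length", "0")),
            # In OpenDRIVE, junction="-1" marks a road outside any junction.
            road.get("junction", "-1") != "-1",
        ))
    return roads


if __name__ == "__main__":
    for road_id, name, length, in_junction in list_roads(SAMPLE_XODR):
        print(road_id, name or "(unnamed)", length, in_junction)
```

A quick check like this can help confirm that the road network of an HD map matches the area covered by the 3D scene before both are loaded into a simulator.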

Our 3D world twin technology brings several advantages for your sensor and perception simulation:

- Rapidly receive your 3D world ready for simulation

- Easily create infinite variations of the 3D scene

- Apply and vary materials of all objects for physical sensor simulation

- Directly receive ground truth information from semantic 3D models

With our rapid generation of 3D world twins, we enable you to simulate at scale, reduce cost and accelerate the time to market of your ADAS and AD solution.

Let’s define your area of interest and you will rapidly receive your 3D environment ready for simulation!

Variations for billions of miles

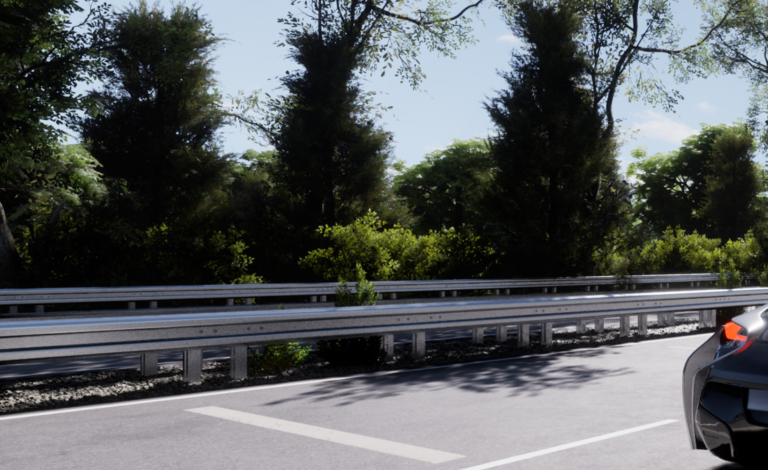

Rapidly create infinite variations of the 3D scene

Our 3D technology allows us to generate an unlimited number of variations of a single scene.

We enable you to simulate your ADAS and AD stack in a large variety of synthetic environments which are rapidly generated.

This increases your synthetic data diversity, enables you to run brute force testing and ultimately increases your perception robustness.
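One common way to organise such variations is a seeded parameter sweep. The Python sketch below enumerates combinations of scene parameters and draws a reproducible sample from them. The parameter names and values (`weather`, `time_of_day`, `vegetation_density`) are assumptions chosen for illustration and do not describe our actual generation parameters.

```python
import itertools
import random

# Hypothetical scene parameters; names and values are illustrative only.
PARAMETERS = {
    "weather": ["clear", "rain", "fog"],
    "time_of_day": ["dawn", "noon", "dusk", "night"],
    "vegetation_density": [0.2, 0.5, 0.8],
}


def enumerate_variations(parameters):
    """Yield every combination of parameter values as a dict."""
    keys = sorted(parameters)
    for values in itertools.product(*(parameters[k] for k in keys)):
        yield dict(zip(keys, values))


def sample_variations(parameters, count, seed=0):
    """Draw a reproducible random subset of all combinations."""
    rng = random.Random(seed)
    all_variations = list(enumerate_variations(parameters))
    return rng.sample(all_variations, min(count, len(all_variations)))


if __name__ == "__main__":
    for variation in sample_variations(PARAMETERS, count=5, seed=42):
        print(variation)
```

Fixing the seed keeps a test run repeatable, so a failure found in one variation can be reproduced later with the same scene settings.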

We are here to support you on the way to a billion miles in simulation and testing, unlocking the full potential of virtual vehicle development.

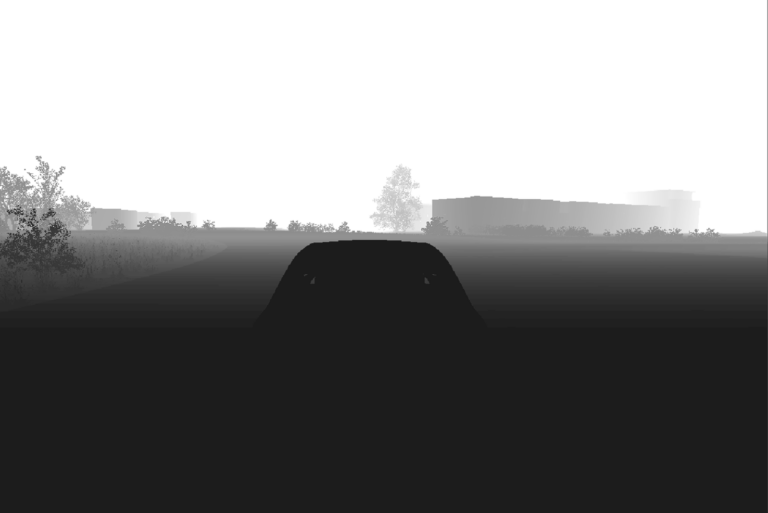

PBR Materials for sensor simulation

3D scenes optimized for physical sensor-realism

As our 3D world twins are object-based reconstructions, we are able to support physically based rendering (PBR) of materials for all objects in the scene.

We enable you to simulate your visual sensor systems at scale, as all objects reflect and absorb light based on realistic material properties.

We are here to support your simulation and testing of virtual camera, radar and lidar systems in our photo- and sensor-realistic 3D world twins.

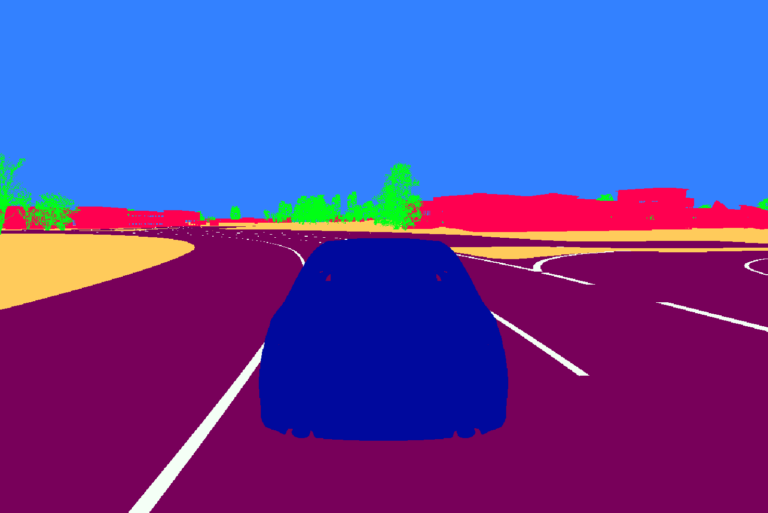

Enabling ground truth data

Accurate synthetic training data at scale

As our 3D world twins are object-based, semantic reconstructions, every object in the scene is classified and can thus be exported as ground truth information.

With our procedural 3D scene generation we enable you to rapidly create large amounts of synthetic training data for your perception algorithms.

We are here to support your AI development with perfect ground truth, for example semantic segmentation or depth maps.
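To illustrate how semantic ground truth can be consumed, the Python sketch below computes the share of pixels per class in a small label mask. The class IDs, class names and the tiny mask are invented for this example; an actual export may use a different format and label set.

```python
from collections import Counter

# Hypothetical class IDs; a real label set may differ.
CLASS_NAMES = {0: "road", 1: "building", 2: "vegetation"}

# Tiny made-up semantic mask: one class ID per pixel.
MASK = [
    [1, 1, 2, 2],
    [1, 1, 2, 2],
    [0, 0, 0, 0],
]


def class_fractions(mask, class_names):
    """Return the fraction of pixels belonging to each class name."""
    counts = Counter(pixel for row in mask for pixel in row)
    total = sum(counts.values())
    return {class_names[cid]: n / total for cid, n in counts.items()}


if __name__ == "__main__":
    for name, fraction in sorted(class_fractions(MASK, CLASS_NAMES).items()):
        print(f"{name}: {fraction:.2%}")
```

Class statistics of this kind are a simple way to check whether a synthetic dataset is balanced before it is used for training.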

Coming soon

We are currently working on truly scalable ADAS and AD simulation environments by addressing two more challenges:

- Leveraging our AI technology to create simulation-grade ASAM OpenDRIVE maps at scale, derived from globally available data sources.

- Adding streaming capabilities to our 3D technology to enable seamless virtual long-distance drives through our 3D world twins of real-world locations.

Other Use Cases:

We are coming from automotive, but we are not limited to that!

Our 3D world twin technology can be applied in several other industries.

Could rapidly creating 3D content from real-world location data unlock the next level of your own use case? We are happy to support you with a 3D world twin of your area, tailored to your specific use case.

Get your sample data now!

Do you want to experience and explore our virtual 3D world twins in your pipeline?

Check out the sample data from our current beta stage!